Although I have not had much personal time lately, to finish the Pandamator code, I managed to move the workflow constraint checking forward, using LtlChecker as I promised in the relevant post. I’m not very happy with all the details — yet. Although I do call it ultimately from within C#, the work is done through F# code, so relying on parameter-less delegates, which in turn rely on mutable state, did not do me any good. I’m thinking of switching everything to use externally passed state, since using refs in F# is a sore sight indeed.

The reason I ended up using F# (except for the obvious reason that F# is more fun than C#) is that the code to generate workflow paths and interleave validation is very heavily recursive and intertwined. Meaning that the 130 lines of F# would have been God knows what, had they been written in C#.

In what follows, I have elided details about the Scenario and the ScenarioStep because they don’t matter for this post. The central point is function pathsFromNode. Don’t worry about the partial match on the list, I make sure the list starts non-empty.

let rec pathsFromNode (f:ScenarioStep -> ConstraintResult -> ConstraintResult) ((step,res) :: path) (scenario:Scenario) =

seq {

let trans = (transitionsFromNode scenario step) |> Seq.toList

if trans.Length = 0 then

let res1 = f step res //run f one more time to simulate extension to infinite path

yield (step,res1) :: path

else

for s in trans do

if not (List.exists (fun (x,_) -> x = s) path) then

let res1 = f s res

if res1.status <> Failed then

yield! (pathsFromNode f ((s,res1) :: (step,res) :: path) scenario)

else yield (s,res1) :: (step,res) :: path

}

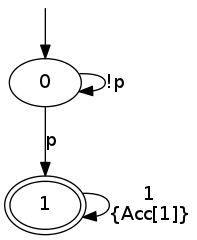

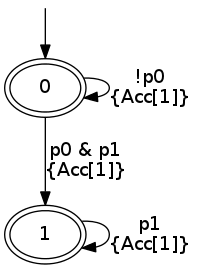

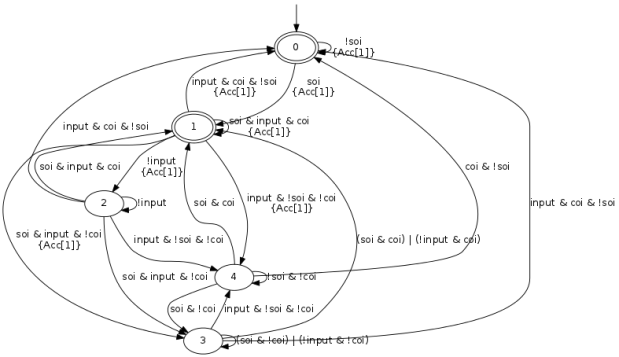

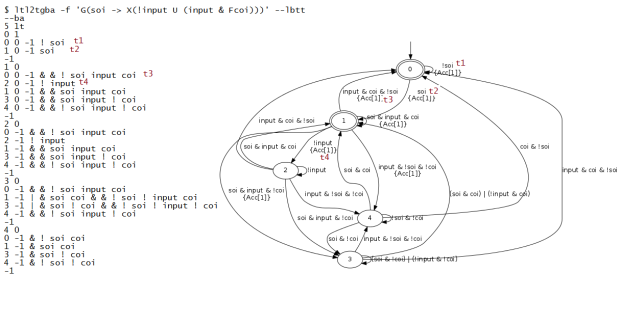

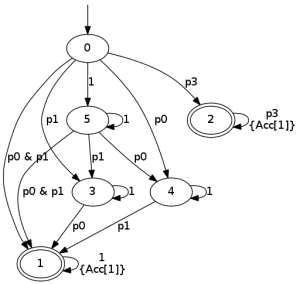

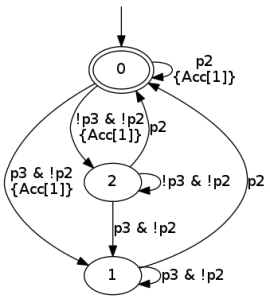

Note the line with the comment. It is necessary for the reason I explained in the last post (Büchi automata assuming infinite words). This function returns a sequence of paths, the generation of each path being able to end prematurely if the constraint validation fails along the way.

Since I am using LTL on single paths, I needed some way to extend this to all possible paths. I do this the easy way, using ∀ and ∃.

let checkForallNever51 (scenario:Scenario) (step:ScenarioStep) =

let step_ref = {new _step_ref with step=step}

let dic = new Dictionary<string,System.Func> ()

let p = Action(51)

dic.Add("p",(fun () -> evalPredicate step_ref.step p))

let con = create_constraint "G ! p" dic

let i0 = init con

if i0.status = Failed then

(false, Some [step, i0])

else

let checker (step:ScenarioStep) (res:ConstraintResult) =

step_ref.step forall_ce (fun ((s,res) :: _) -> res.status <> Failed && res.status <> CannotTerminate)

let checkNever51After52 (scenario:Scenario) =

let coisteps = scenario.steps |> List.filter (fun s -> s.actions |> List.exists (fun a -> a.id = 52))

coisteps |> forall_ce2 (checkForallNeverSoi scenario)

Never mind forall_ce, it is like forall but returns a counterexample if it fails (annotated boolean, if you like). There is also an exists_ce with the same return type, but it always returns None since failing does not produce any counterexample. And the forall_ce2 variant accepts a predicate that returns an annotated boolean, as well. I know, the names suck but I couldn’t think of anything better.

As you can see, I am selecting the start nodes and run a ∀ on each of them. In this example, I am not starting from entry nodes of the workflow.

In the next example, I am starting from entry nodes, but (in a perverse twist) I also use the constraint validation with a ∃ for filtering the start nodes.

let checkEventually52 quantifier (scenario:Scenario) (step:ScenarioStep) =

let step_ref = {new _step_ref with step=step}

let dic = new Dictionary<string,System.Func<bool>> ()

dic.Add("p",(fun () -> evalPredicate step_ref.step (Action(52))))

dic.Add("q",(fun () -> evalPredicate step_ref.step (Action(51))))

let con = create_constraint "F p & G ! q" dic

let i0 = init con

if i0.status = Failed then

(false, Some [step, i0])

else

let checker (step:ScenarioStep) (res:ConstraintResult) =

step_ref.step <- step

next res

let paths = pathsFromNode checker [(step,i0)] scenario

paths |> quantifier (fun ((s,res) :: _) -> res.status <> Failed && res.status <> CannotTerminate)

let checkForallEventually52 (scenario:Scenario) (step:ScenarioStep) =

checkEventuallyCoi forall_ce scenario step

let checkExistsEventually52 (scenario:Scenario) (step:ScenarioStep) =

checkEventuallyCoi exists_ce scenario step

let checkEventually52After52Event (scenario:Scenario) =

let coieventsteps = scenario.steps

|> List.filter (fun s ->

((stepHasInput s) && (fst (checkExistsEventually52 scenario s)))

|| (stepHasWebactionFor52 s))

coieventsteps |> forall_ce2 (checkForallEventually52 scenario)

The quantifier is a parameter of checkEventually52. Granted, it is not the cleaner code imaginable. It is also not comparable to what CTL* (or plain CTL — I remind you that CTL allows quantifiers before any occurrence of a modal operator in an expression, not just at top level) would be able to express. However, it has proven that it is possible to express constraints a lot more declaratively than my ad-hoc Prolog program did. What remains now, is to use it to express useful constraints.